Managing the Risks of Generative AI: Achieving Compliance Across Use Cases

The potential impact of generative AI on financial services is difficult to quantify. While it builds on the sophisticated pattern matching used in traditional machine learning, generative AI represents the first time machines can understand, generate and reason over human language. In short, it stands to fundamentally change how we search, categorize, alert on and investigate unstructured information. However, managing the risks of generative AI is not a simple task.

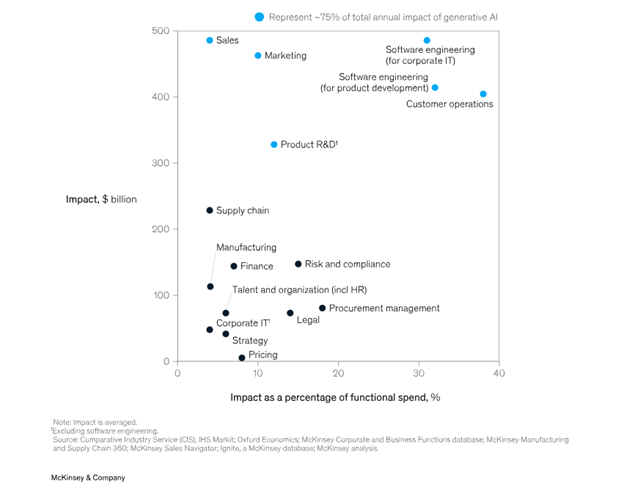

Measured by impact on functional spending, a recent McKinsey & Company analysis estimates that generative AI could affect risk and compliance to the tune of $250B.

As McKinsey noted, “many of the work activities that involve communications, supervision, documentation and interacting with people in general have the potential to be automated by generative AI, accelerating the transformation of work in industries like technology.” Factor in the many ways generative AI may be used in sales and marketing functions, and the potential impact grows further.

Nevertheless, within risk and compliance, many firms have indicated that they are looking for validation points that show business executives where the potential benefits of generative AI outweigh the risks. Until then, many firms are attempting to prohibit employees from using publicly accessible models. Firms are also stepping up due diligence on existing internal and third-party applications to understand model inputs and the data protections those providers support. Given the enormous potential of generative AI, this cautious posture toward internal development is likely to evolve rapidly.

The use cases where we expect firms to see the greatest potential from generative AI include the following:

- Know your customer (KYC): KYC may be an early target for generative AI, both for screening and for conducting customer investigations. More broadly, customer-facing AI-enabled applications such as chatbots are already common among financial institutions. Generative AI is likely to be used to identify potential violations of regulations governing communications with the public.

- Market abuse: Generative AI can improve detection quality in communications surveillance and expand the breadth of coverage in conducting employee investigations.

- Customer protection: There’s an opportunity to use generative AI in investigating suitability issues as well as compliance with the SEC’s recently updated marketing rules.

- Legal: Legal teams have long been consumers of earlier forms of AI, using technology-assisted review (TAR) and predictive analytics for early case assessment and legal document review in e-discovery and investigations.

- Anti-money laundering (AML): Generative AI can help automate the filing of suspicious activity reports (SARs) and support transaction analysis.

- Controls: Regulation of generative AI is evolving rapidly, and generative AI itself may help firms scan and monitor for regulatory updates and map their controls to those updates.

Regulatory perspectives on generative AI

Regulators have been watching generative AI closely, and new guidance is almost certain to emerge over the coming months. They have targeted, and will continue to target, a wide variety of concerns, including cyber fraud, cyberattacks, identity theft, investor protection, the introduction and management of bias, and a range of other topics.

When it comes to managing the risks of generative AI, several regulatory perspectives have already been offered, including the following:

- Securities and Exchange Commission (SEC): The SEC has made several public comments about generative AI, with Chair Gary Gensler warning of its potential to impact “financial market stability” given its capacity to facilitate fraud and create “conflicts of interest.” Firms will likely struggle to ensure that investor interests are protected and balanced against the firms’ own interests. This is the subject of an SEC sweep of firm activities running into October 2023.

- Biden Administration Executive Order on Artificial Intelligence: This broad executive order establishes new standards for AI safety and security, with a focus that includes consumer and privacy protection.

- EU AI Act: Positioned to be the first comprehensive regulation of AI, the act establishes consumer and individual protections according to specific risk categories.

- National Institute of Standards and Technology (NIST): NIST has published a number of works that attempt to define standards governing the use of AI, including risk management frameworks, and has conducted research into what it considers ‘trustworthy’ AI technologies.

- Federal Trade Commission (FTC): The FTC is currently investigating OpenAI’s data protection capabilities. The goal is to ensure that information used to develop and train models is appropriately managed, that information ownership rights are respected, and that OpenAI is properly meeting its data protection and data privacy obligations.

- Office of the Comptroller of the Currency (OCC): The OCC is conducting an ongoing review of the use of AI in banking and the potential for discrimination to be built into banking algorithms.

- Consumer Financial Protection Bureau (CFPB): The CFPB issued a spotlight on the use of chatbots in banking following an increase in consumer complaints about the difficulty of resolving disputes. The CFPB noted that all of the top 10 commercial banks in the US use chatbots to engage with customers.

Financial regulators are almost certain to issue additional guidance. We can also safely assume that data privacy authorities and US federal and international government bodies will continue to address these and other common areas of concern, including model explainability, data privacy protections and model risk management.

Looking toward the future

Managing the risks of generative AI will be a big task for compliance and risk teams. However, keeping pace with the technology as the business adopts it is about more than mitigating regulatory risk. It is an opportunity to apply good governance principles that enable your business to innovate and grow. A forward-thinking approach to compliance recognizes that establishing processes that adhere to regulatory requirements goes hand in hand with meeting business goals.

The world is past the “toe-dipping” stage with AI and machine learning. As fast as businesses across all industries adopt AI technology, regulators will roll out new requirements and issue hefty fines for non-compliance. Stay tuned for part 2 of this Generative AI and Compliance series to find out how to govern the output of generative AI.

Smarsh Blog

Our internal subject matter experts and our network of external industry experts are featured with insights into the technology and industry trends that affect your electronic communications compliance initiatives.
